Transcoding is one of the fundamental pillars that define the quality of the watch experience for an OTT platform such as ours. Efficient transcoding is crucial for handling the large volume of daily content ingestion, which can run into the hundreds of hours, including several tens of hours of 4K content. At the Video Center of Excellence @ Disney+ Hotstar, we are on a mission to redefine encoding and to deliver best-in-class video quality at the most reasonable cost.

Over the years, the Disney+ Hotstar Video Center of Excellence has proposed and integrated more innovative ways of processing more content in less time while being leaner on the wire. These improvements have led to smoother launches in many countries, each with thousands of new titles to process. This article discusses how we upped the transcoding game over the last year, solving critical problems in scaling transcoding horizontally and vertically without incurring huge costs.

The goal of the transcoding pipeline at Disney+ Hotstar is to deliver more content to the platform in less time at optimal quality, with a point of recovery in case the transcoding of a title fails. Processing longer 4K movies also requires a massive amount of resources: because source bitrates can reach 1Gbps, memory use may reach 70–100GB and CPU use 36–48 vCPUs. Even with these resources, the process can take 10–15 times the film’s length to produce renditions fit for OTT delivery.

We can tackle these challenges with the following options:

- Vertical scaling with more threads and memory (bad for quality, no option to resume): Increasing the number of compute threads usually has a negative impact on the quality of transcoded files because parallelism limits the references available for prediction during compression. In addition, it does not solve the problem of having to restart from the beginning after a failure.

- Horizontal scaling with chunked encoding + optimal threading + stitching later: Processing content in small segments of 20–40s across many parallel compute resources can reduce turnaround time (TAT). It also introduces a point of recovery, so processing can resume if a single chunk fails.
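The point of recovery in the second option can be sketched roughly as follows. This is a minimal illustration, not our production code: `run_with_resume`, `encode_chunk`, the `done` checkpoint set, and `max_attempts` are all hypothetical names, and a real pipeline would persist the checkpoint outside the process.

```python
from typing import Callable, Dict, Iterable, Set


def run_with_resume(
    chunk_ids: Iterable[int],
    encode_chunk: Callable[[int], None],
    done: Set[int],
    max_attempts: int = 3,
) -> Dict[int, str]:
    """Encode every chunk not already in `done`, retrying failures per chunk.

    `encode_chunk` is a hypothetical callable that raises on failure.
    `done` acts as the checkpoint: it is updated in place, so a later call
    skips chunks that already succeeded.
    Returns a map of chunk id -> last error for chunks that never succeeded.
    """
    failures: Dict[int, str] = {}
    for chunk_id in chunk_ids:
        if chunk_id in done:
            continue  # finished work is never redone
        for attempt in range(1, max_attempts + 1):
            try:
                encode_chunk(chunk_id)
                done.add(chunk_id)
                failures.pop(chunk_id, None)  # clear errors from earlier attempts
                break
            except Exception as exc:  # broad catch for illustration only
                failures[chunk_id] = f"attempt {attempt}: {exc}"
    return failures
```

Only the failed chunk is retried, which is the key difference from full-length encoding, where any failure means starting over.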

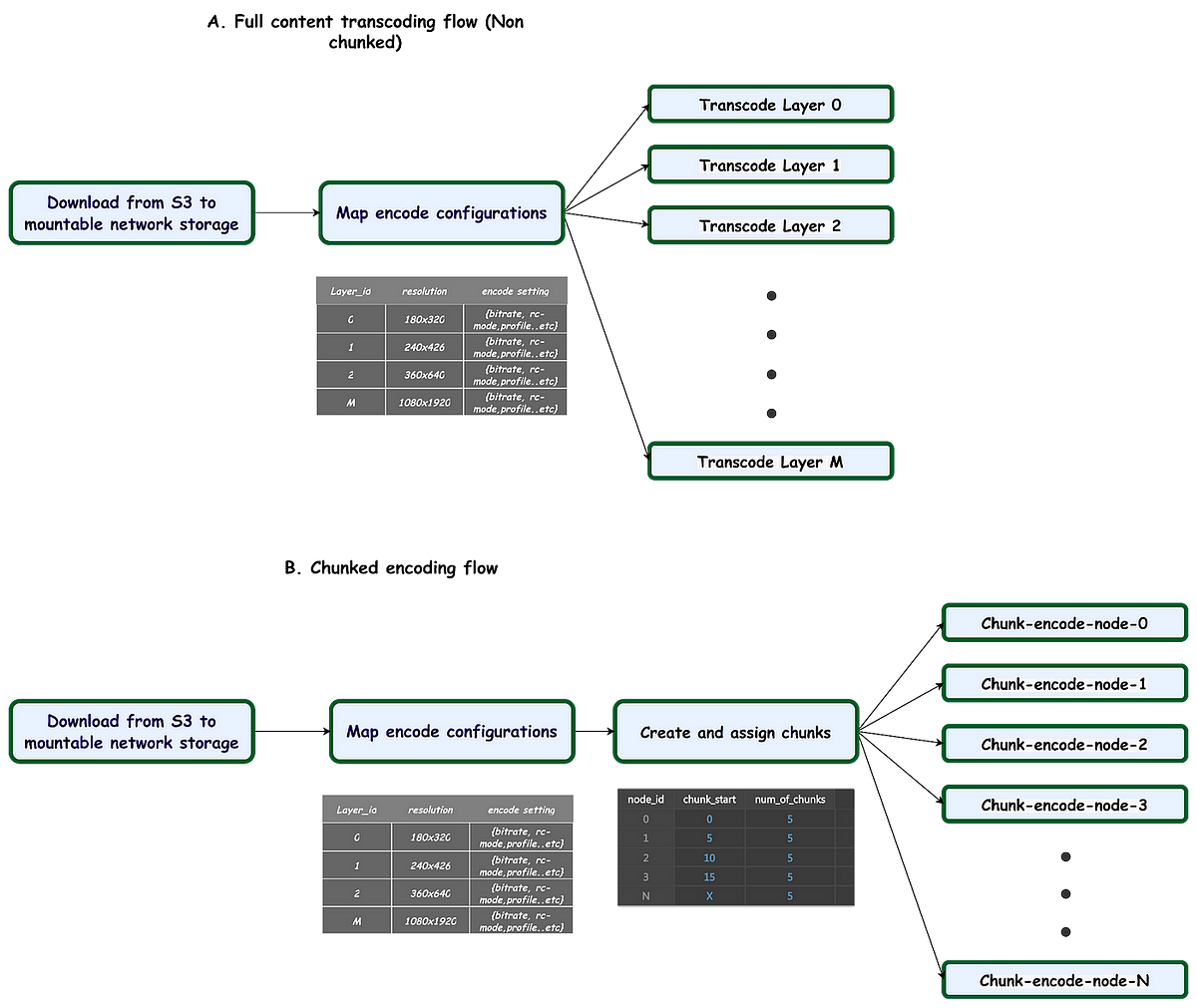

By introducing chunked encoding at Disney+ Hotstar, we made orders-of-magnitude improvements, and 4K transcoding became possible within the prescribed resource requirements and processing time. Fig 1 compares the chunked and non-chunked encoding flows. In chunked encoding:

- We divide the entire input into smaller units (also known as chunks).

- We process the chunks in parallel to shorten the transcode time and make it scalable.

- We use a constant chunk length so that SLAs are easier to predict.
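The steps above can be sketched as a small chunk planner plus a parallel map. This is an illustrative sketch under stated assumptions: the 30s default chunk length is simply a value inside the 20–40s range mentioned earlier, `encode_chunk` is a placeholder that returns a label instead of invoking a real encoder, and a thread pool stands in for separate compute nodes.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Span = Tuple[float, float]


def plan_chunks(duration_s: float, chunk_len_s: float = 30.0) -> List[Span]:
    """Return (start, end) times for constant-length chunks.

    Only the final chunk may be shorter than `chunk_len_s`.
    """
    if duration_s <= 0 or chunk_len_s <= 0:
        raise ValueError("duration and chunk length must be positive")
    count = math.ceil(duration_s / chunk_len_s)
    return [
        (i * chunk_len_s, min((i + 1) * chunk_len_s, duration_s))
        for i in range(count)
    ]


def encode_chunk(span: Span) -> str:
    """Placeholder for a real encoder call (hypothetical)."""
    start, end = span
    return f"chunk_{start:.0f}_{end:.0f}"


def encode_in_parallel(
    duration_s: float, chunk_len_s: float = 30.0, max_workers: int = 4
) -> List[str]:
    """Encode all chunks concurrently and return results in timeline order."""
    spans = plan_chunks(duration_s, chunk_len_s)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, so stitching can simply
        # concatenate the outputs.
        return list(pool.map(encode_chunk, spans))
```

With a constant chunk length, the chunk count (and so the work per title) follows directly from the duration, which is what makes SLAs easier to predict.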

While adopting chunked encoding, we did an extensive study of the quality trade-offs and rate control limitations at different chunk sizes, because at chunk boundaries the encoder loses the context of scenes in the neighboring chunks.

Impact

We saw TAT drop by 2–4 times compared to full-length processing, as shown in Fig 2. Chunked encoding enabled 4K transcoding, especially for longer films like Avengers, without resource constraints or multiple retries. We limited the maximum number of chunk nodes per content to account for practical compute resource availability.

Disney+ Hotstar serves a range of content every day, from daily soap episodes to premium content that arrives sporadically through the week. With Disney+ Hotstar launching in multiple countries across the globe, we also needed to bulk-process thousands of titles to seed the catalog in those countries. Daily soap episodes need quick processing so they can stream within hours. Bulk content for new markets is less time-sensitive, with SLAs stretching to weeks or months, but its high volume demands more elasticity. Expected daily capacity covers the soap episodes, while scaling pressure comes mostly from bulk content. At Disney+ Hotstar, we introduced some important components to chunked encoding to help scale our transcoding pipeline elastically. They were:

- Storage and I/O optimization: We used to download the whole file before starting the encoding process. This added significant lag before encoding could start, especially for longer and 4K titles. It also meant that network storage had to hold the whole source file, which made scaling inefficient.

- Scaling compute resources for a consistent SLA: The chunked encoding system tried to fill all the available chunk nodes (up to the maximum number of chunk nodes) in a round-robin fashion. We had an opportunity to be more cost-efficient by scaling according to content duration.

The most significant impediment to elastic scaling in Disney+ Hotstar encoding was the amount of mountable network storage and I/O required per content. In chunked encoding, we downloaded the whole source file and then started processing each chunk, as represented in Fig 3A. This was simple and stable from an engineering standpoint in the beginning. But as we wanted to scale and process tens of thousands of titles for our new country launches, it would have posed a massive challenge in storage and I/O requirements while scaling up our transcoding capacity. Furthermore, because 4K source files are massive (up to 1TB), 4K titles were experiencing significant download delays in the existing pipeline before encoding could begin.

To scale up without using more resources and to decrease 4K download latency, we created a method that downloads the source data chunk by chunk, transcodes each chunk, and then removes the source chunk.
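A rough sketch of this download, transcode, delete loop for a single worker is shown below. It is an illustration only: `fetch_chunk` and `transcode` are hypothetical callables standing in for the real storage client and encoder, and the file naming is made up. In the actual pipeline many workers would run such a loop in parallel.

```python
import os
import tempfile
from typing import Callable, List


def process_streaming(
    num_chunks: int,
    fetch_chunk: Callable[[int, str], None],
    transcode: Callable[[str], str],
    workdir: str,
) -> List[str]:
    """Fetch, transcode, and delete one source chunk at a time.

    `fetch_chunk(index, dest_path)` writes source chunk `index` to `dest_path`.
    `transcode(src_path)` returns the path of the encoded output.
    Both are hypothetical stand-ins, not a real API.
    """
    outputs: List[str] = []
    for index in range(num_chunks):
        src_path = os.path.join(workdir, f"src_{index:05d}.bin")
        fetch_chunk(index, src_path)
        try:
            outputs.append(transcode(src_path))
        finally:
            # Remove the source chunk immediately, even if transcoding
            # failed, so this worker never holds more than one on disk.
            if os.path.exists(src_path):
                os.remove(src_path)
    return outputs
```

Because each worker holds at most one source chunk, peak storage depends on chunk size and worker count rather than on the size of the full source file, and encoding can start as soon as the first chunk arrives.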

Impact

The above technique reduced network storage and I/O read count by up to 80%, as shown in Fig 4. We could transcode around 4 times more titles at a given time than before.

Fig 5 shows the impact on transcode TAT. With resource optimization, transcode time also drops because of the large reduction in I/O from network storage. We saved around 15% of computing cost per content thanks to lower network storage requirements and shorter computing time (TAT). Previously, whenever we scaled up our encoding pipeline, we ran into network storage I/O bottlenecks. The above resource optimization technique was a massive win for us in smoothly transcoding tens of thousands of titles and exceeding expectations in transcoding throughput during new launches.

Disney+ Hotstar releases all of the day’s episodes at once at a set time. Given this publishing SLA for our daily episodes, we aim for collective publishing rather than making individual episodes available in advance. We can also give a 30-minute episode fewer compute nodes than a 2-hour movie and still meet the SLA. So, instead of distributing chunks to a fixed number of compute nodes per content, we scale according to content duration, which helps us process more content efficiently within the SLA. An example is shown in Fig 6. The advantages are:

- Fewer pods are scheduled per content according to duration → no unnecessary compute resources are scheduled, which saves money and leaves room to process more content at the same time.

- Fewer nodes are required for shorter content such as daily episodes → efficient usage of cheaper instances (e.g., AWS spot instances).

- Consistent throughput: We tuned the balance between giving maximum resources to a single content and distributing resources across many contents. For our use case, where multiple contents are published at the same time, content-duration-aware resource allocation works best.
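Duration-aware allocation can be sketched as a simple rule that derives the node count from the number of chunks and caps it at a maximum. This is a minimal sketch, not our scheduler: `nodes_for_content`, the 30s chunk length, the 12 chunks per node, and the cap of 20 nodes are all hypothetical values chosen for illustration.

```python
import math


def nodes_for_content(
    duration_s: float,
    chunk_len_s: float = 30.0,
    chunks_per_node: int = 12,
    max_nodes: int = 20,
) -> int:
    """Pick a node count that grows with duration, capped at `max_nodes`.

    All default values are illustrative assumptions, not production settings.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    num_chunks = math.ceil(duration_s / chunk_len_s)
    wanted = math.ceil(num_chunks / chunks_per_node)
    return max(1, min(max_nodes, wanted))


# Under these assumed values, a 30-minute episode gets fewer nodes than a
# 2-hour movie.
episode_nodes = nodes_for_content(30 * 60)    # 60 chunks  -> 5 nodes
movie_nodes = nodes_for_content(2 * 60 * 60)  # 240 chunks -> 20 nodes
```

Keeping the amount of work per node roughly constant means short episodes leave nodes free for other titles, while long films still stop at the cap.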

The above strategy gave us more consistent delivery of daily episodes with a considerable reduction in manual effort. Content-duration-aware resource utilization let us use a higher percentage of cheaper instances, saving around 10–15% of computing costs.

Overall, we are constantly pushing the state-of-the-art and innovating Disney+ Hotstar’s video processing and encoding capabilities to evolve and optimize transcoding beyond encode parameter tuning, scale transcoding pipelines with efficient resource utilization, and bring user delight with various content adaptive techniques.

By implementing chunked encoding, we expedited 4K transcoding by 2–4 times and, with further optimizations, reduced network storage by up to 80%, thereby roughly quadrupling our capacity. This approach streamlined daily episode delivery, reduced manual intervention, and cut computing costs by 10–15%.

We intend to extend our transcoding optimization innovation and learnings to other aspects of complete content ingestion, such as packaging, manifest generation, and final upload of URLs and packaged artifacts.

Pumped up about solving such hard technology problems that make a huge difference to customer experience and set the future tone for content streaming? Check out: https://careers.hotstar.com/
